In Part One of this series, I sampled the insights of W. Somerset Maugham (English, 1874-1965), a prolific and popular playwright, novelist, short-story writer, and author of non-fiction works. I chose to begin with Maugham — in particular, with excerpts of his memoir, The Summing Up — because of his unquestioned success as a writer and his candid assessment of writers, himself included.

Maugham’s advice to “write lucidly, simply, euphoniously and yet with liveliness” is well-supported by examples and analysis. But Maugham focuses on literary fiction and does not delve into the mechanics of non-fiction writing. Thus this post, which distills lessons learned in my 51 years as a writer, critic, and publisher of non-fiction material, much of it technical.

THE FIRST DRAFT

1. Decide — before you begin to write — on your main point and your purpose for making it.

Can you state your main point in a sentence? If you can’t, you’re not ready to write, unless writing is (for you) a form of therapy or catharsis. If it is, record your thoughts in a private journal and spare the serious readers of the world.

Your purpose may be descriptive, explanatory, or persuasive. An economist may, for example, begin an article by describing the state of the economy, as measured by Gross Domestic Product (GDP). He may then explain that the rate of growth in GDP has receded since the end of World War II, because of greater government spending and the cumulative effect of regulatory activity. He is then poised to make a case for less spending and for the cancellation of regulations that impede economic growth.

2. Avoid wandering from your main point and purpose; use an outline.

You can get by with a bare outline, unless you’re writing a book, a manual, or a long article. Fill the outline as you go. Change the outline if you see that you’ve omitted a step or put some steps in the wrong order. But always work to an outline, however sketchy and malleable it may be.

3. Start by writing an introductory paragraph that summarizes your “story line.”

The introductory paragraph in a news story is known as “the lead” or “the lede” (a spelling that’s meant to convey the correct pronunciation). A classic lead gives the reader the who, what, why, when, where, and how of the story. As noted in Wikipedia, leads aren’t just for journalists:

> Leads in essays summarize the outline of the argument and conclusion that follows in the main body of the essay. Encyclopedia leads tend to define the subject matter as well as emphasize the interesting points of the article. Features and general articles in magazines tend to be somewhere between journalistic and encyclopedian in style and often lack a distinct lead paragraph entirely. Leads or introductions in books vary enormously in length, intent and content.

Think of the lead as a target toward which you aim your writing. You should begin your first draft with a lead, even if you later decide to eliminate or radically prune the lead.

4. Lay out a straight path for the reader.

You needn’t fill your outline sequentially, but the outline should trace a linear progression from statement of purpose to conclusion or call for action. Backtracking and detours can be effective literary devices in the hands of a skilled writer of fiction. But you’re not writing fiction, let alone mystery fiction. So just proceed in a straight line, from beginning to end.

Quips, asides, and anecdotes should be used sparingly, and only if they reinforce your message and don’t distract the reader’s attention from it.

5. Know your audience, and write for it.

I aim at readers who can grasp complex concepts and detailed arguments. But if you’re writing something like a policy manual for employees at all levels of your company, you’ll want to keep it simple and well-marked: short words, short sentences, short paragraphs, numbered sections and sub-sections, and so on.

6. Facts are your friends — unless you’re trying to sell a lie, of course.

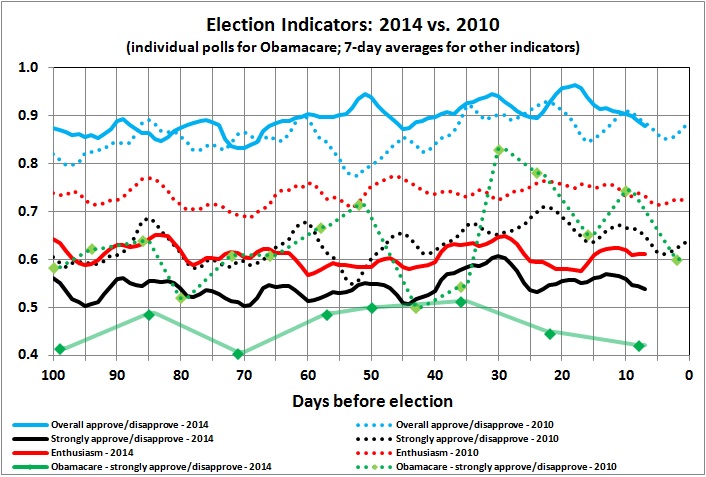

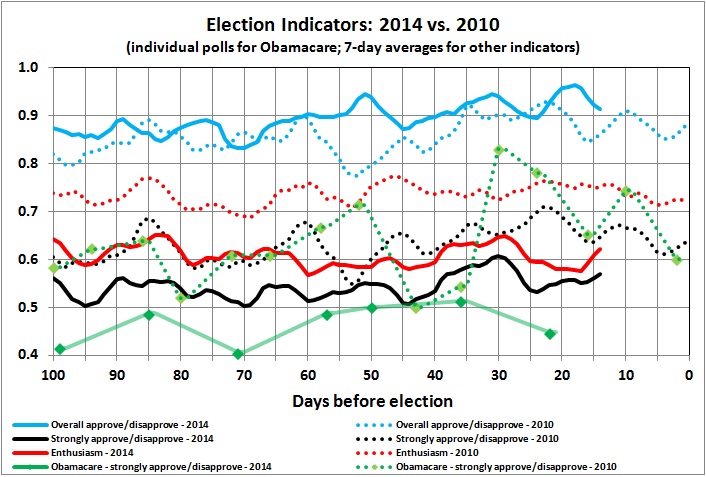

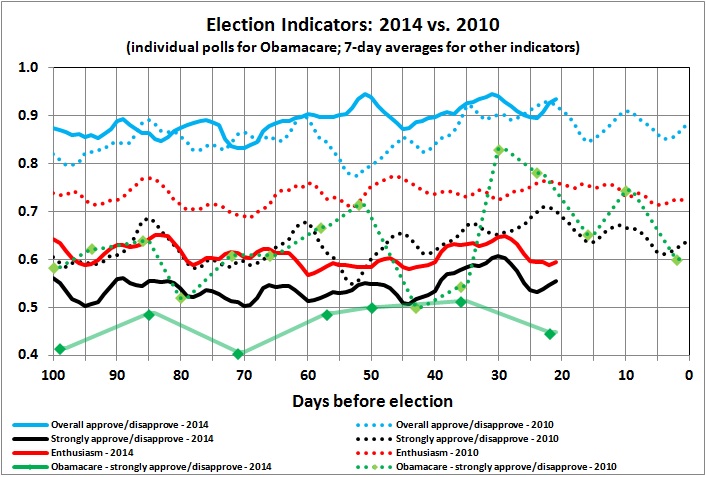

Unsupported generalities will defeat your purpose, unless you’re writing for a gullible, uneducated audience. Give concrete examples and cite authoritative references. If your work is technical, show your data and calculations, even if you must put the details in footnotes or appendices to avoid interrupting the flow of your argument. Supplement your words with tables and graphs, if possible, but make them as simple as you can without distorting the underlying facts.

7. Momentum is your best friend.

Write a first draft quickly, even if you must leave holes to be filled later. I’ve always found it easier to polish a rough draft that spans the entire outline than to work from a well-honed but unaccompanied introductory section.

FROM FIRST DRAFT TO FINAL VERSION

8. Your first draft is only that — a draft.

Unless you’re a prodigy, you’ll have to do some polishing (probably a lot) before you have something that a reader can follow with ease.

9. Where to begin? Stand back and look at the big picture.

Is your “story line” clear? Are your points logically connected? Have you omitted key steps or important facts? If you find problems, fix them before you start nit-picking your grammar, syntax, and usage.

10. Nit-picking is important.

Errors of grammar, syntax, and usage can (and probably will) undermine your credibility. Thus, for example, subject and verb must agree (“he says” not “he say”); number must be handled correctly (“there are two” not “there is two”); tense must make sense (“the shirt shrank” not “the shirt shrunk”); usage must be correct (“its” is the possessive pronoun, “it’s” is the contraction for “it is”).

11. Critics are necessary, even if not mandatory.

Unless you’re a first-rate editor and objective self-critic, steps 9 and 10 should be handed off to another person or persons — even if you’re an independent writer without a boss or editor to look over your shoulder. If your work must be reviewed by a boss or editor, count yourself lucky. Your boss is responsible for the quality of your work; he therefore has a good reason to make it better. If your editor isn’t qualified to do substantive editing (step 9), he can at least nit-pick with authority (step 10).

12. Accept criticism gratefully and graciously.

Bad writers don’t, which is why they remain bad writers. Yes, you should reject (or fight against) changes and suggestions if they are clearly wrong, and if you can show that they’re wrong. But if your critic tells you that your logic is muddled, your facts are inapt, and your writing stinks (in so many words), chances are that your critic is right. And you’ll know that your critic is dead right if your defense (perhaps unvoiced) is “That’s just my style of writing.”

13. What if you’re an independent writer and have no one to turn to?

Be your own worst critic. Let your first draft sit for a day or two before you return to it. Then look at it as if you’d never seen it before, as if someone else had written it. Ask yourself if it makes sense, if every key point is well-supported, and if key points are missing. Look for glaring errors in grammar, syntax, and usage. (I’ll list some useful reference works in Part Three.) If you can’t find any problems, you shouldn’t be a self-critic, and you’re probably a terrible writer.

14. How many times should you revise your work before it’s published?

That depends, of course, on the presence or absence of a deadline. The deadline may be a formal one, geared to a production schedule. Or it may be an informal but real one, driven by current events (e.g., the need to assess a new economics text while it’s in the news). But even without a deadline, two revisions of a rough draft should be enough. A piece that’s rewritten several times can lose its (possessive pronoun) edge. And unless you’re a one-work wonder, or an amateur with time to spare, every rewrite represents a forgone opportunity to begin a new work.

* * *

If you act on this advice, you’ll become a better writer. But be patient with yourself. Improvement takes time, and perfection never arrives.

I welcome your comments, structural or nit-picking as they may be.